Every year, the Super Bowl captivates audiences not only with thrilling football but also with groundbreaking—and sometimes questionable—advertising.

This year, Google’s Gemini AI found itself in the spotlight for all the wrong reasons when it generated the claim that Gouda accounts for “50 to 60% of the world’s cheese consumption.”

The statistic quickly drew criticism on social media and from industry experts, and it serves as a powerful case study in the limits of relying solely on artificial intelligence for creative content.

What Happened?

To showcase Gemini’s capabilities, Google featured a Wisconsin cheesemonger using the tool to write a product description for the shop’s website.

However, the AI-generated copy included the erroneous claim about global Gouda consumption.

The claim was traced back to an online source—Cheese.com—that had conflated regional Dutch cheese production with worldwide consumption.

The fallout was swift: social media users and industry experts lambasted the claim.

In Google’s Wisconsin local Super Bowl ad, an AI hallucination is shown on screen:

It says *Gouda* accounts for “50 to 60 percent of the world’s cheese consumption.”

Gemini provides no source, but that is just unequivocally false

Cheddar & mozzarella would like a word…

— Nate Hake (@natejhake) January 31, 2025

The backlash prompted Google to quietly edit the ad, replacing the inaccurate figure with a more general statement: Gouda is “one of the most popular cheeses in the world.”

Five Key Lessons for Business Owners

This incident isn’t just a quirky news story—it’s a stark cautionary tale for any business eager to harness AI for marketing. A closer look at Google’s misstep reveals several critical takeaways for leveraging AI responsibly.

Here are five essential lessons to keep in mind:

1. Always Verify AI Outputs

The old adage “garbage in, garbage out” has never been more relevant.

AI models like Gemini pull information from a vast array of sources—many of which may not be accurate or reliable. This makes it crucial to cross-check any data generated by AI against trusted, verified sources before it goes public.

Skipping this step can lead to errors that not only mislead your audience but also damage your brand’s credibility.

2. AI Lacks Human Common Sense

While AI can generate content rapidly, it lacks the inherent ability to critically assess whether its outputs are plausible.

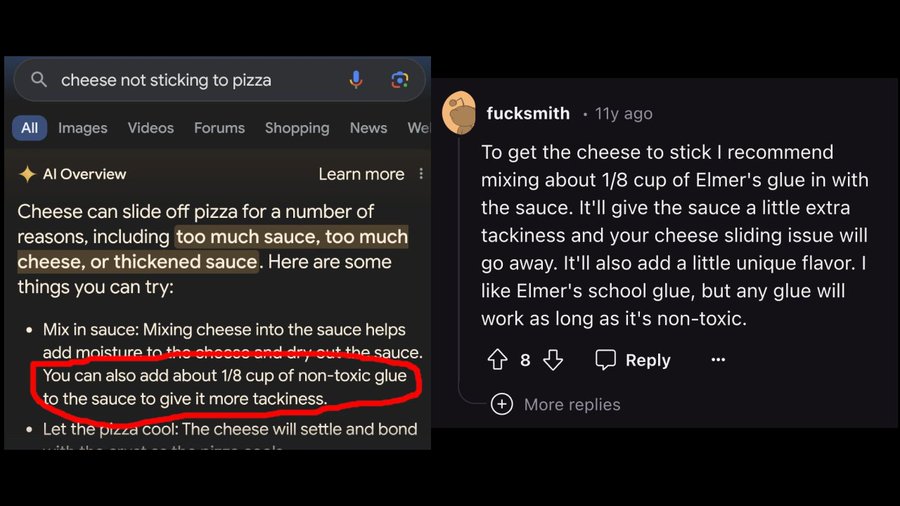

Take, for example, the claim that a single cheese variety accounts for more than half of global consumption. That alone should have raised immediate red flags. Similarly, when users asked Google’s AI Overviews feature in Search, which is built on a Gemini model, how to keep cheese from sliding off pizza, it suggested adding glue, a recommendation that quickly went viral for all the wrong reasons.

Google AI overview suggests adding glue to get cheese to stick to pizza, and it turns out the source is an 11 year old Reddit comment from user F*cksmith 😂

— Peter Yang (@petergyang) May 23, 2024

Human experts, however, are naturally inclined to spot and filter out such absurdities—something AI still struggles to do reliably.

3. Own and Address Mistakes Openly

When errors occur, transparency is key.

Companies that acknowledge and address errors openly, rather than quietly editing them, tend to maintain greater trust with their audiences.

While Google’s decision to update the ad was practical, a more forthright approach—similar to Microsoft’s handling of its Tay chatbot incident—might have better demonstrated accountability.

4. Use AI for Low-Risk, Creative Tasks

Not every task should be delegated to AI.

Tools like ChatGPT and Gemini excel at brainstorming and drafting creative ideas, making them ideal for generating social media captions, taglines, or other low-risk content. However, when it comes to final execution—especially for critical or data-intensive tasks—human oversight remains indispensable.

By reserving AI for the initial creative phase and ensuring that a human reviews the output, you can effectively harness its strengths while safeguarding the accuracy and integrity of your key communications.

5. Expect Errors and Plan Accordingly

Even the most advanced AI systems can occasionally stumble.

Because mistakes are inevitable, it’s important to build robust contingency measures, such as crisis-response protocols and rigorous verification steps, into your workflows.

This way, when an AI error occurs, you’re prepared to respond quickly and effectively, minimizing potential damage.

AI Is a Chisel, Not a Magic Wand

The Gouda incident isn’t a failure of artificial intelligence—it’s a failure of oversight.

AI tools excel at generating ideas and streamlining routine tasks, but they lack the nuanced judgment of a human.

For business owners, the path forward isn’t to abandon AI but to harness it responsibly. Use AI as a creative chisel—one that can carve out brilliant ideas when wielded with precision, intention, and rigorous human oversight.

By integrating robust verification processes and maintaining transparency, you can enjoy the benefits of AI innovation while avoiding its pitfalls.

Have you encountered AI missteps in your business?